Building a Highly Available Kubernetes Cluster with Multiple Masters

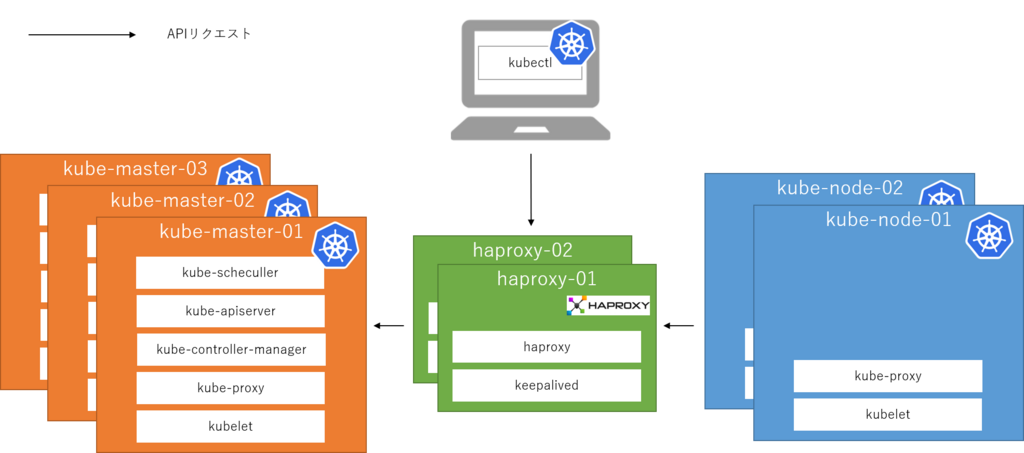

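The other day I published an article on making haproxy redundant with keepalived. Using that highly available load balancer, I built a highly available Kubernetes cluster with multiple masters, and this post walks through the procedure.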

Environment

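The architecture is simple. Kubelet and kubectl do not send API requests directly to a master. Instead, haproxy sits in between and forwards them, which makes the masters redundant. The two haproxy hosts share the virtual IP 192.168.0.89 through keepalived. haproxy also forwards the etcd client port (2379).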

The OS is CentOS 7.4.

Procedure

The actual steps follow.

Common settings (haproxy-01, haproxy-02, kube-master-01, kube-master-02, kube-master-03, kube-node-01, kube-node-02)

- Configure /etc/hosts

# vi /etc/hosts

192.168.0.82 kube-master-01.bbrfkr.mydns.jp
192.168.0.83 kube-master-02.bbrfkr.mydns.jp
192.168.0.84 kube-master-03.bbrfkr.mydns.jp
192.168.0.85 kube-node-01.bbrfkr.mydns.jp
192.168.0.86 kube-node-02.bbrfkr.mydns.jp
192.168.0.87 haproxy-01.bbrfkr.mydns.jp
192.168.0.88 haproxy-02.bbrfkr.mydns.jp
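All seven hosts get the same seven entries, so you can generate them from one list instead of typing them by hand. This is a minimal sketch. The function name `print_hosts_entries` is hypothetical; the addresses and names are the ones above.

```shell
# Hypothetical helper: prints the /etc/hosts entries used in this article.
# Each input row is "IP short-hostname"; the domain is appended to every name.
print_hosts_entries() {
  local domain="bbrfkr.mydns.jp"
  while read -r ip name; do
    printf '%s %s.%s\n' "$ip" "$name" "$domain"
  done <<'EOF'
192.168.0.82 kube-master-01
192.168.0.83 kube-master-02
192.168.0.84 kube-master-03
192.168.0.85 kube-node-01
192.168.0.86 kube-node-02
192.168.0.87 haproxy-01
192.168.0.88 haproxy-02
EOF
}
```

For example, run `print_hosts_entries >> /etc/hosts` on each host, after checking that the entries are not already present.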

- Relax SELinux and disable firewalld

This is a test environment, so SELinux is set to permissive and firewalld is disabled.

# sed -i 's/SELINUX=.*/SELINUX=permissive/g' /etc/selinux/config
# systemctl disable firewalld
# reboot

Building the load balancer (haproxy-*)

- Install haproxy and keepalived (haproxy-01, haproxy-02)

# yum -y install haproxy keepalived

- Configure haproxy (haproxy-01, haproxy-02)

# vi /etc/haproxy/haproxy.cfg

# mode tcp passes the TLS (6443) and etcd (2379) streams through untouched,
# even if a defaults section elsewhere in the file sets mode http.
frontend api *:6443
    mode tcp
    default_backend api

frontend etcd *:2379
    mode tcp
    default_backend etcd

backend api
    mode tcp
    balance leastconn
    server master1 kube-master-01.bbrfkr.mydns.jp:6443 check
    server master2 kube-master-02.bbrfkr.mydns.jp:6443 check backup
    server master3 kube-master-03.bbrfkr.mydns.jp:6443 check backup

backend etcd
    mode tcp
    balance leastconn
    server master1 kube-master-01.bbrfkr.mydns.jp:2379 check
    server master2 kube-master-02.bbrfkr.mydns.jp:2379 check backup
    server master3 kube-master-03.bbrfkr.mydns.jp:2379 check backup
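A plain `check` with no `option httpchk` makes haproxy test each server with a TCP connect. Once the masters are running, you can reproduce the same kind of probe by hand from an LB host to see which backends should be considered up. This is a minimal sketch. It assumes bash (for its `/dev/tcp` redirection) and coreutils `timeout`, and both function names are hypothetical.

```shell
# Hypothetical helper: succeeds if a TCP connection to HOST:PORT opens within
# 2 seconds, roughly the test haproxy's plain "check" performs.
probe_tcp() {
  local host="$1" port="$2"
  # bash opens a TCP socket when redirecting to /dev/tcp/HOST/PORT.
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Hypothetical helper: probes every master on the two ports haproxy forwards.
check_masters() {
  local m p
  for m in kube-master-01 kube-master-02 kube-master-03; do
    for p in 6443 2379; do
      if probe_tcp "${m}.bbrfkr.mydns.jp" "$p"; then
        echo "${m}:${p} up"
      else
        echo "${m}:${p} DOWN"
      fi
    done
  done
}
```

Running `check_masters` on haproxy-01 or haproxy-02 prints one up/DOWN line per master and port.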

- Start haproxy and enable it at boot (haproxy-01, haproxy-02)

# systemctl enable haproxy
# systemctl start haproxy

- Configure keepalived (haproxy-01)

# vi /etc/keepalived/keepalived.conf

vrrp_script chk_haproxy {
    script "systemctl is-active haproxy"
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 1
    priority 101
    virtual_ipaddress {
        192.168.0.89
    }
    track_script {
        chk_haproxy
    }
}

- Configure keepalived (haproxy-02)

# vi /etc/keepalived/keepalived.conf

vrrp_script chk_haproxy {
    script "systemctl is-active haproxy"
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 1
    priority 100
    virtual_ipaddress {
        192.168.0.89
    }
    track_script {
        chk_haproxy
    }
}
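The two keepalived.conf files differ only in `state` and `priority`. The host with the higher priority holds 192.168.0.89 as long as its haproxy is active. This minimal sketch prints either file from those two values; the function name is hypothetical.

```shell
# Hypothetical helper: prints this article's keepalived.conf.
# $1 = state (MASTER or BACKUP), $2 = priority (the higher one holds the VIP).
print_keepalived_conf() {
  local state="$1" priority="$2"
  cat <<EOF
vrrp_script chk_haproxy {
    script "systemctl is-active haproxy"
}

vrrp_instance VI_1 {
    state ${state}
    interface eth0
    virtual_router_id 1
    priority ${priority}
    virtual_ipaddress {
        192.168.0.89
    }
    track_script {
        chk_haproxy
    }
}
EOF
}
```

For example, run `print_keepalived_conf MASTER 101 > /etc/keepalived/keepalived.conf` on haproxy-01 and `print_keepalived_conf BACKUP 100 > /etc/keepalived/keepalived.conf` on haproxy-02.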

- Start keepalived and enable it at boot (haproxy-01, haproxy-02)

# systemctl enable keepalived
# systemctl start keepalived

Installing Docker (kube-*)

- Install the package

# yum -y install docker

- Set up the disk for container storage

# cat <<EOF > /etc/sysconfig/docker-storage-setup
DEVS=/dev/vda
VG=docker-vg
EOF
# docker-storage-setup

- Enable hairpin NAT (without this, a Pod cannot reach a Service that routes back to that same Pod)

# sed -i "s/OPTIONS='/OPTIONS='--userland-proxy=false /g" /etc/sysconfig/docker
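The sed above inserts the flag right after the opening quote of the OPTIONS line. For example, `OPTIONS='--selinux-enabled'` becomes `OPTIONS='--userland-proxy=false --selinux-enabled'`. If you run it twice, the flag is added twice. The variant below is safe to re-run; the helper name is hypothetical.

```shell
# Hypothetical helper: adds --userland-proxy=false to the OPTIONS line of a
# docker sysconfig file (default /etc/sysconfig/docker), but only once.
add_userland_proxy_flag() {
  local file="${1:-/etc/sysconfig/docker}"
  # Flag already present: nothing to do.
  if grep -q -- '--userland-proxy=false' "$file"; then
    return 0
  fi
  # Same substitution as the command above.
  sed -i "s/OPTIONS='/OPTIONS='--userland-proxy=false /g" "$file"
}
```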

- Start the service and enable it at boot

# systemctl enable docker
# systemctl start docker

Installing etcd (kube-master-*)

- Install the package (kube-master-*)

# yum -y install etcd

- Edit the configuration file (kube-master-01)

# vi /etc/etcd/etcd.conf

#[Member]
ETCD_NAME=kube-master-01
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://192.168.0.82:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.82:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.82:2379"
ETCD_INITIAL_CLUSTER="kube-master-01=http://192.168.0.82:2380,kube-master-02=http://192.168.0.83:2380,kube-master-03=http://192.168.0.84:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-for-kubernetes"

- Edit the configuration file (kube-master-02)

# vi /etc/etcd/etcd.conf

#[Member]
ETCD_NAME=kube-master-02
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://192.168.0.83:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.83:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.83:2379"
ETCD_INITIAL_CLUSTER="kube-master-01=http://192.168.0.82:2380,kube-master-02=http://192.168.0.83:2380,kube-master-03=http://192.168.0.84:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-for-kubernetes"

- Edit the configuration file (kube-master-03)

# vi /etc/etcd/etcd.conf

#[Member]
ETCD_NAME=kube-master-03
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://192.168.0.84:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.84:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.84:2379"
ETCD_INITIAL_CLUSTER="kube-master-01=http://192.168.0.82:2380,kube-master-02=http://192.168.0.83:2380,kube-master-03=http://192.168.0.84:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-for-kubernetes"
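The three files differ only in `ETCD_NAME` and the member's own IP. `ETCD_INITIAL_CLUSTER_STATE` defaults to `new` in etcd, so writing it on all three members, as this sketch does, has the same effect as the files above. The function name is hypothetical.

```shell
# Hypothetical helper: prints this article's etcd.conf for one master.
# $1 = member name, $2 = that master's IP. The cluster list is the same on all three.
print_etcd_conf() {
  local name="$1" ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME=${name}
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
ETCD_INITIAL_CLUSTER="kube-master-01=http://192.168.0.82:2380,kube-master-02=http://192.168.0.83:2380,kube-master-03=http://192.168.0.84:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-for-kubernetes"
EOF
}
```

For example, run `print_etcd_conf kube-master-02 192.168.0.83 > /etc/etcd/etcd.conf` on kube-master-02.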

- Start etcd and enable it at boot (kube-master-02, kube-master-03)

# systemctl enable etcd
# systemctl start etcd

- Start etcd and enable it at boot (kube-master-01)

# systemctl enable etcd
# systemctl start etcd

- Check the cluster status (kube-master-01)

# etcdctl member list
# etcdctl cluster-health
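`etcdctl cluster-health` (etcd v2 API) prints one line per member, followed by a summary line that reads `cluster is healthy` when everything is fine. This minimal sketch is a check that scripts can use; it treats any other result as a failure. The function name is hypothetical.

```shell
# Hypothetical helper: reads `etcdctl cluster-health` output on stdin and
# succeeds only if it contains the summary line "cluster is healthy".
is_cluster_healthy() {
  grep -qx 'cluster is healthy'
}
```

For example: `etcdctl cluster-health | is_cluster_healthy && echo "etcd OK"`.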

- Create the container network configuration used by flannel (kube-master-01)

# etcdctl mkdir /ha-kubernetes/network

# etcdctl mk /ha-kubernetes/network/config '{ "Network": "10.1.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" } }'
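With `Network` set to 10.1.0.0/16 and `SubnetLen` set to 24, flannel leases each host its own /24 from the /16. Here is the arithmetic as a small worked example:

```shell
# Worked arithmetic for the flannel network config above.
network_len=16   # "Network": "10.1.0.0/16"
subnet_len=24    # "SubnetLen": 24

# Number of per-host /24 subnets that fit in the /16: 2^(24-16)
subnets=$(( 1 << (subnet_len - network_len) ))
# Addresses in one /24, minus the network and broadcast addresses: 2^(32-24) - 2
hosts_per_subnet=$(( (1 << (32 - subnet_len)) - 2 ))

echo "per-host subnets: ${subnets}"                     # per-host subnets: 256
echo "usable addresses per host: ${hosts_per_subnet}"   # usable addresses per host: 254
```

So this network can hold up to 256 hosts. On each host, the docker0 bridge and the Pods share at most 254 usable addresses.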

Installing flannel - masters - (kube-master-*)

- Install wget

# yum -y install wget

- Download, extract, and install the binaries

# mkdir flanneld && cd flanneld
# wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz
# tar xvzf flannel-v0.10.0-linux-amd64.tar.gz
# mv flanneld mk-docker-opts.sh /usr/bin

- Clean up

# cd ..
# rm -rf flanneld

- Create the systemd files

# vi /usr/lib/systemd/system/flanneld.service

[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/sysconfig/flanneld
EnvironmentFile=-/etc/sysconfig/docker-network
ExecStart=/usr/bin/flanneld-start $FLANNEL_OPTIONS
ExecStartPost=/usr/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service

# touch /usr/bin/flanneld-start
# chown root:root /usr/bin/flanneld-start
# chmod 755 /usr/bin/flanneld-start
# vi /usr/bin/flanneld-start

#!/bin/sh

exec /usr/bin/flanneld \
    -etcd-endpoints=${FLANNEL_ETCD_ENDPOINTS:-${FLANNEL_ETCD}} \
    -etcd-prefix=${FLANNEL_ETCD_PREFIX:-${FLANNEL_ETCD_KEY}} \
    "$@"
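The wrapper relies on the shell's `${A:-${B}}` expansion. It uses `FLANNEL_ETCD_ENDPOINTS` and `FLANNEL_ETCD_PREFIX` when they are set and non-empty. Otherwise it falls back to `FLANNEL_ETCD` and `FLANNEL_ETCD_KEY`, presumably older variable names kept for compatibility. Here is a small demonstration; the function name is hypothetical.

```shell
# Demonstrates the fallback used in /usr/bin/flanneld-start.
# $1 plays FLANNEL_ETCD_ENDPOINTS, $2 plays the fallback FLANNEL_ETCD.
resolve_endpoint() {
  local FLANNEL_ETCD_ENDPOINTS="$1" FLANNEL_ETCD="$2"
  echo "${FLANNEL_ETCD_ENDPOINTS:-${FLANNEL_ETCD}}"
}

resolve_endpoint "http://127.0.0.1:2379" "http://192.168.0.89:2379"  # prints http://127.0.0.1:2379
resolve_endpoint "" "http://192.168.0.89:2379"                       # prints http://192.168.0.89:2379
```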

# mkdir /usr/lib/systemd/system/docker.service.d
# vi /usr/lib/systemd/system/docker.service.d/flannel.conf

[Service]
EnvironmentFile=-/run/flannel/docker

- Edit the configuration file

# vi /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://127.0.0.1:2379"
FLANNEL_ETCD_PREFIX="/ha-kubernetes/network"
#FLANNEL_OPTIONS=""

- Start the service and enable it at boot

# systemctl enable flanneld
# systemctl start flanneld

- Restart the docker service

# systemctl restart docker

Installing flannel - nodes - (kube-node-*)

- Install wget

# yum -y install wget

- Download, extract, and install the binaries

# mkdir flanneld && cd flanneld
# wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz
# tar xvzf flannel-v0.10.0-linux-amd64.tar.gz
# mv flanneld mk-docker-opts.sh /usr/bin

- Clean up

# cd ..
# rm -rf flanneld

- Create the systemd files

# vi /usr/lib/systemd/system/flanneld.service

[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/sysconfig/flanneld
EnvironmentFile=-/etc/sysconfig/docker-network
ExecStart=/usr/bin/flanneld-start $FLANNEL_OPTIONS
ExecStartPost=/usr/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service

# touch /usr/bin/flanneld-start
# chown root:root /usr/bin/flanneld-start
# chmod 755 /usr/bin/flanneld-start
# vi /usr/bin/flanneld-start

#!/bin/sh

exec /usr/bin/flanneld \
    -etcd-endpoints=${FLANNEL_ETCD_ENDPOINTS:-${FLANNEL_ETCD}} \
    -etcd-prefix=${FLANNEL_ETCD_PREFIX:-${FLANNEL_ETCD_KEY}} \
    "$@"

# mkdir /usr/lib/systemd/system/docker.service.d
# vi /usr/lib/systemd/system/docker.service.d/flannel.conf

[Service]
EnvironmentFile=-/run/flannel/docker

- Edit the configuration file

# vi /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.0.89:2379"
FLANNEL_ETCD_PREFIX="/ha-kubernetes/network"
#FLANNEL_OPTIONS=""

- Start the service and enable it at boot

# systemctl enable flanneld
# systemctl start flanneld

- Restart the docker service

# systemctl restart docker
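After flanneld starts, it records its lease in a subnet file (by default /run/flannel/subnet.env) as KEY=VALUE lines such as `FLANNEL_SUBNET=10.1.x.1/24`. Each master and node should show a different /24 inside 10.1.0.0/16, and after the restart docker0 should carry that address (`ip addr show docker0`). This minimal sketch extracts the leased subnet; the function name is hypothetical.

```shell
# Hypothetical helper: prints the FLANNEL_SUBNET value from a flannel subnet
# file (default /run/flannel/subnet.env) without sourcing the file.
leased_subnet() {
  local file="${1:-/run/flannel/subnet.env}"
  sed -n 's/^FLANNEL_SUBNET=//p' "$file"
}
```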

Configuring Kubernetes - masters - (kube-master-*)

- Download the binaries

# curl -O https://storage.googleapis.com/kubernetes-release/release/v1.9.1/kubernetes-server-linux-amd64.tar.gz

- Extract the tarball

# tar xvzf kubernetes-server-linux-amd64.tar.gz

- Remove unneeded files

# rm -f kubernetes/server/bin/*.*

- Move the binaries into place

# mv kubernetes/server/bin/* /usr/bin/

- Clean up

# rm -rf kubernetes*

- Create the kube user

# groupadd --system kube

# useradd \
    --home-dir "/home/kube" \
    --system \
    --shell /bin/false \
    -g kube \
    kube

# mkdir -p /home/kube

# chown kube:kube /home/kube

- Create the directories for Kubernetes configuration files and certificates

# mkdir -p /etc/kubernetes/certs
# chown kube:kube /etc/kubernetes

- Place the systemd unit files for the master components

# vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target
After=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/apiserver
User=kube
ExecStart=/usr/bin/kube-apiserver \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_ETCD_SERVERS \
    $KUBE_API_PORT \
    $KUBE_API_ADDRESS \
    $KUBE_ALLOW_PRIV \
    $KUBE_SERVICE_ADDRESSES \
    $KUBE_ADMISSION_CONTROL \
    $KUBE_API_ARGS
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/controller-manager
User=kube
ExecStart=/usr/bin/kube-controller-manager \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler Plugin
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/scheduler
User=kube
ExecStart=/usr/bin/kube-scheduler \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

- Place the master configuration files

# vi /etc/kubernetes/config

###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=true"

# vi /etc/kubernetes/apiserver

###
# kubernetes system config
#
# The following values are used to configure the kube-apiserver
#

# The address on the local server to listen to.
KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1 --bind-address=0.0.0.0"

# The port on the local server to listen on.
KUBE_API_PORT="--insecure-port=8080 --secure-port=6443"

# Comma separated list of nodes in the etcd cluster
KUBE_ETCD_SERVERS="--etcd-servers=http://127.0.0.1:2379"

# Address range to use for services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=172.16.0.0/16"

# default admission control policies
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Add your own!
KUBE_API_ARGS="--apiserver-count 3 --cert-dir /etc/kubernetes/certs --basic-auth-file=/etc/kubernetes/basic-auth-list.csv --authorization-mode=ABAC --authorization-policy-file=/etc/kubernetes/abac-policy.json"

# vi /etc/kubernetes/controller-manager

###
# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!
KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --service-account-private-key-file=/etc/kubernetes/certs/apiserver.key"

# vi /etc/kubernetes/scheduler

###
# kubernetes scheduler config

# default config should be adequate

# Add your own!
KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig"

# vi /etc/kubernetes/kubeconfig

apiVersion: v1
kind: Config
clusters:
- cluster:
    server: https://127.0.0.1:6443
    insecure-skip-tls-verify: true
  name: ha-kubernetes
contexts:
- context:
    cluster: ha-kubernetes
    user: kube-process
  name: kube-process-to-ha-kubernetes
current-context: kube-process-to-ha-kubernetes
users:
- name: kube-process
  user:
    username: kube-process
    password: password

# vi /etc/kubernetes/abac-policy.json

{"apiVersion": "abac.authorization.kubernetes.io/v1beta1", "kind": "Policy", "spec": {"group":"system:authenticated", "nonResourcePath": "*", "readonly": true}}
{"apiVersion": "abac.authorization.kubernetes.io/v1beta1", "kind": "Policy", "spec": {"group":"system:unauthenticated", "nonResourcePath": "*", "readonly": true}}
{"apiVersion": "abac.authorization.kubernetes.io/v1beta1", "kind": "Policy", "spec": {"user":"admin", "namespace": "*", "resource": "*", "apiGroup": "*" }}
{"apiVersion": "abac.authorization.kubernetes.io/v1beta1", "kind": "Policy", "spec": {"user":"kube-process", "namespace": "*", "resource": "*", "apiGroup": "*" }}

# vi /etc/kubernetes/basic-auth-list.csv

password,admin,1
password,kube-process,2

- Change ownership of the configuration files

# chown kube:kube /etc/kubernetes/*

- Restrict access to the password file

# chmod 600 /etc/kubernetes/basic-auth-list.csv

- Copy the OpenSSL configuration file openssl.cnf (kube-master-01 only)

# cd
# cp /etc/pki/tls/openssl.cnf .

- Add the SAN settings to openssl.cnf (kube-master-01 only)

# sed -i 's/\[ v3_ca \]/\[ v3_ca \]\nsubjectAltName = @alt_names/g' openssl.cnf
# cat <<EOF >> openssl.cnf
[ alt_names ]
DNS.1 = kubernetes.default.svc
DNS.2 = kubernetes.default
DNS.3 = kubernetes
DNS.4 = localhost
IP.1 = 192.168.0.89
IP.2 = 172.16.0.1
EOF
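IP.2 is needed because the API server assigns the `kubernetes` Service the first address of `--service-cluster-ip-range` (172.16.0.0/16 in this article), and Pods reach the API server through that address. This minimal sketch computes that address. It assumes the CIDR is written with its host bits set to zero, and the function name is hypothetical.

```shell
# Hypothetical helper: prints the network address of an IPv4 CIDR plus one,
# i.e. the ClusterIP the "kubernetes" Service receives from that range.
first_ip() {
  local net="${1%/*}" a b c d n
  # Split the dotted quad into four octets.
  IFS=. read -r a b c d <<EOF
$net
EOF
  # Network address as a 32-bit integer, plus one.
  n=$(( (a << 24 | b << 16 | c << 8 | d) + 1 ))
  printf '%d.%d.%d.%d\n' $(( n >> 24 & 255 )) $(( n >> 16 & 255 )) $(( n >> 8 & 255 )) $(( n & 255 ))
}
```

`first_ip 172.16.0.0/16` prints 172.16.0.1, which matches IP.2 above. If you change the service range, recompute this value and update the SAN to match.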

- Create the API server private key (kube-master-01 only)

# openssl genrsa 2048 > apiserver.key

- Create the CSR and self-sign the certificate (kube-master-01 only)

# openssl req -new -key apiserver.key -out apiserver.csr

# openssl x509 -days 7305 -req -signkey apiserver.key -extensions v3_ca -extfile ./openssl.cnf -in apiserver.csr -out apiserver.crt

- Install the self-signed certificate and key, and distribute them to the other masters (kube-master-01 only)

# cp apiserver* /etc/kubernetes/certs
# scp apiserver* 192.168.0.83:/etc/kubernetes/certs
# scp apiserver* 192.168.0.84:/etc/kubernetes/certs

- Set permissions

# chmod 600 /etc/kubernetes/certs/apiserver.key
# chown kube:kube /etc/kubernetes/certs/apiserver*

- Clean up (kube-master-01 only)

# rm -f apiserver.*
# rm -f openssl.cnf

- Enable the services at boot and start them

# systemctl enable kube-apiserver kube-scheduler kube-controller-manager
# systemctl start kube-apiserver
# systemctl start kube-scheduler kube-controller-manager
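The API server can take a few seconds to come up. It listens on the insecure port 127.0.0.1:8080 (see /etc/kubernetes/apiserver above), so you can poll its `/healthz` endpoint locally before moving on. This is a minimal retry helper; the function name is hypothetical.

```shell
# Hypothetical helper: retries a command until it succeeds or attempts run out.
# $1 = number of attempts, $2 = seconds between attempts, rest = the command.
wait_for() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    # Sleep only if another attempt follows.
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$(( i + 1 ))
  done
  return 1
}
```

For example, run `wait_for 30 2 curl -sf http://127.0.0.1:8080/healthz` on each master. It returns success as soon as the API server answers.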

Configuring Kubernetes - node components, part 1 - (kube-master-*)

- Disable swap (comment out the swap entry in /etc/fstab, then reboot)

# vi /etc/fstab
# reboot
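By default, kubelet refuses to start while swap is enabled (its `--fail-swap-on` flag defaults to true since Kubernetes 1.8), which is why swap is turned off here. Instead of editing /etc/fstab by hand, you can comment out the swap entries with a small script, and `swapoff -a` turns swap off immediately. This minimal sketch treats any entry whose third field is `swap` as a swap entry; the function name is hypothetical.

```shell
# Hypothetical helper: comments out active swap entries (third field "swap")
# in an fstab file, default /etc/fstab. Other lines are left untouched.
disable_swap_in_fstab() {
  local file="${1:-/etc/fstab}" tmp status=0
  tmp="$(mktemp)" || return 1
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$file" > "$tmp" &&
    cat "$tmp" > "$file" || status=1   # rewrite in place, keeping owner and mode
  rm -f "$tmp"
  return "$status"
}
```

For example, run `disable_swap_in_fstab && swapoff -a` on each host.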

- Create the kubelet directory

# mkdir -p /var/lib/kubelet
# chown root:root /var/lib/kubelet

- Place the systemd unit files for the node components

# vi /usr/lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/bin/kubelet \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBELET_ADDRESS \
    $KUBELET_PORT \
    $KUBELET_HOSTNAME \
    $KUBE_ALLOW_PRIV \
    $KUBELET_POD_INFRA_CONTAINER \
    $KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target

# vi /usr/lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/proxy
ExecStart=/usr/bin/kube-proxy \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

- Place the node configuration files

# vi /etc/kubernetes/kubelet

###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on
KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
# KUBELET_HOSTNAME="--hostname-override=127.0.0.1"
KUBELET_HOSTNAME=""

# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0"

# Add your own!
KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --cgroup-driver=systemd --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice"

# vi /etc/kubernetes/proxy

###
# kubernetes proxy config

# default config should be adequate

# Add your own!
# --cluster-cidr is the Pod network (flannel's 10.1.0.0/16), not the Service range
KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --cluster-cidr=10.1.0.0/16"

- Change ownership of the configuration files

# chown kube:kube /etc/kubernetes/*

- Enable the services at boot and start them (node components)

# systemctl enable kubelet kube-proxy
# systemctl start kubelet kube-proxy

Configuring Kubernetes - node components, part 2 - (kube-node-*)

- Download the binaries

# curl -O https://storage.googleapis.com/kubernetes-release/release/v1.9.1/kubernetes-node-linux-amd64.tar.gz

- Extract the tarball

# tar xvzf kubernetes-node-linux-amd64.tar.gz

- Remove unneeded files

# rm -f kubernetes/node/bin/*.*

- Move the binaries into place

# mv kubernetes/node/bin/* /usr/bin/

- Clean up

# rm -rf kubernetes*

- Disable swap (comment out the swap entry in /etc/fstab, then reboot)

# vi /etc/fstab
# reboot

- Create the kube user

# groupadd --system kube

# useradd --home-dir "/home/kube" \
    --system \
    --shell /bin/false \
    -g kube \
    kube

# mkdir -p /home/kube

# chown kube:kube /home/kube

- Create the kubelet directory

# mkdir -p /var/lib/kubelet
# chown root:root /var/lib/kubelet

- Create the directory for Kubernetes configuration files

# mkdir -p /etc/kubernetes
# chown kube:kube /etc/kubernetes

- Place the systemd unit files for the node components

# vi /lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/bin/kubelet \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBELET_ADDRESS \
    $KUBELET_PORT \
    $KUBELET_HOSTNAME \
    $KUBE_ALLOW_PRIV \
    $KUBELET_POD_INFRA_CONTAINER \
    $KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target

# vi /lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/proxy
ExecStart=/usr/bin/kube-proxy \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

- Place the node configuration files

# vi /etc/kubernetes/config

###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=true"

# vi /etc/kubernetes/kubelet

###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on
KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
# KUBELET_HOSTNAME="--hostname-override=127.0.0.1"
KUBELET_HOSTNAME=""

# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0"

# Add your own!
KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --cgroup-driver=systemd --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice"

# vi /etc/kubernetes/proxy

###
# kubernetes proxy config

# default config should be adequate

# Add your own!
# --cluster-cidr is the Pod network (flannel's 10.1.0.0/16), not the Service range
KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --cluster-cidr=10.1.0.0/16"

# vi /etc/kubernetes/kubeconfig

apiVersion: v1
kind: Config
clusters:
- cluster:
    server: https://192.168.0.89:6443
    insecure-skip-tls-verify: true
  name: ha-kubernetes
contexts:
- context:
    cluster: ha-kubernetes
    user: kube-process
  name: kube-process-to-ha-kubernetes
current-context: kube-process-to-ha-kubernetes
users:
- name: kube-process
  user:
    username: kube-process
    password: password

- Change ownership of the configuration files

# chown kube:kube /etc/kubernetes/*

- Enable the services at boot and start them

# systemctl enable kubelet kube-proxy
# systemctl start kubelet kube-proxy